Local and Remote LLMs, Airflow, and MLflow

June 25, 2025Summary

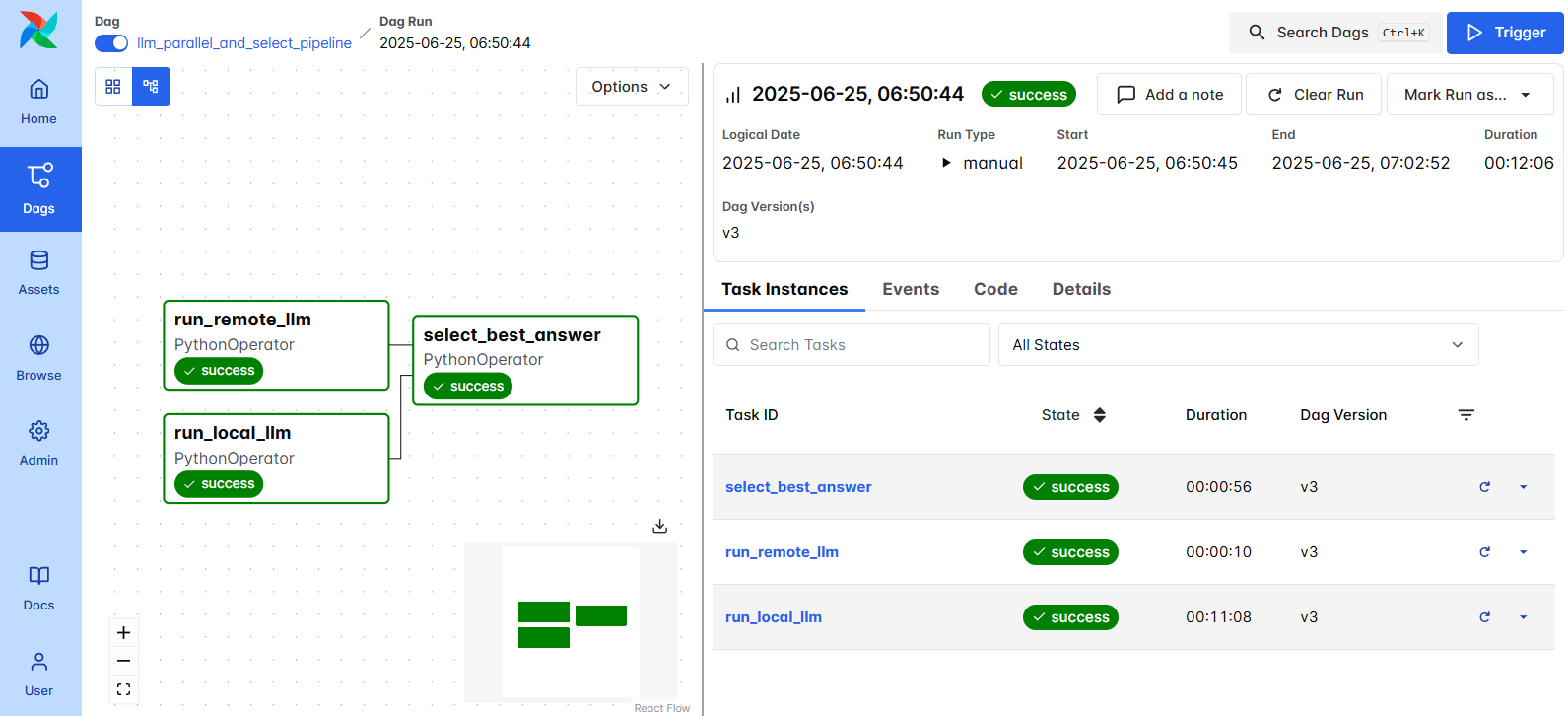

I'm sharing my experience of building an Airflow pipeline that concurrently executes both local and remote LLMs. A judging LLM then selects the best replies from the two. MLflow is used to track experiments and capture LLM replies.

The pipeline executes each local LLM and the remote Gemini model a number of times in a row. All replies are captured by MLflow and then sent to a more powerful LLM to judge and select the best reply among all.

Local LLM

When downloaded the total size of the google/gemma-3-1b-pt is 1.9 GB and the quality of the responses is surprisingly OK and fast on my not-so-powerful computer. The generated output is often weird though:

Prompt:

Tell me about the role of russian coal miners in the American revolutionary war.

Local gemma-3-1b-pt:

Hi there, Russian coal miners were an important part of the American Revolution. Their skills were critical in the industrialization of the United States. They were the first to mine and process coal for steam engines.

Airflow pipeline

The pipeline is simple and self-explanatory, once LLMs finished running, the best answer is selected. It took 11 minutes to execute local LLM inference and the same job took 10 seconds for the API version of the model.

MLflow allows us to collect and visualize metrics. I chose to collect some performance-related ones for local invocation of the LLM.

What did I learn

- Running local LLMs in June 2025 is a painful endeavor unless you have a powerful computer to execute it on.

- MLflow 3.1.0 is great, stable and has an overall intuitive interface (both API and UI) even for somebody who has not much exposure to model management.

- The quality of responses from local LLMs is okay for a set of well-known facts, but the model hallucinates for less-known ones.