Building an MCP-Powered Agent for Bearing Health Monitoring

January 21, 2026

Intro

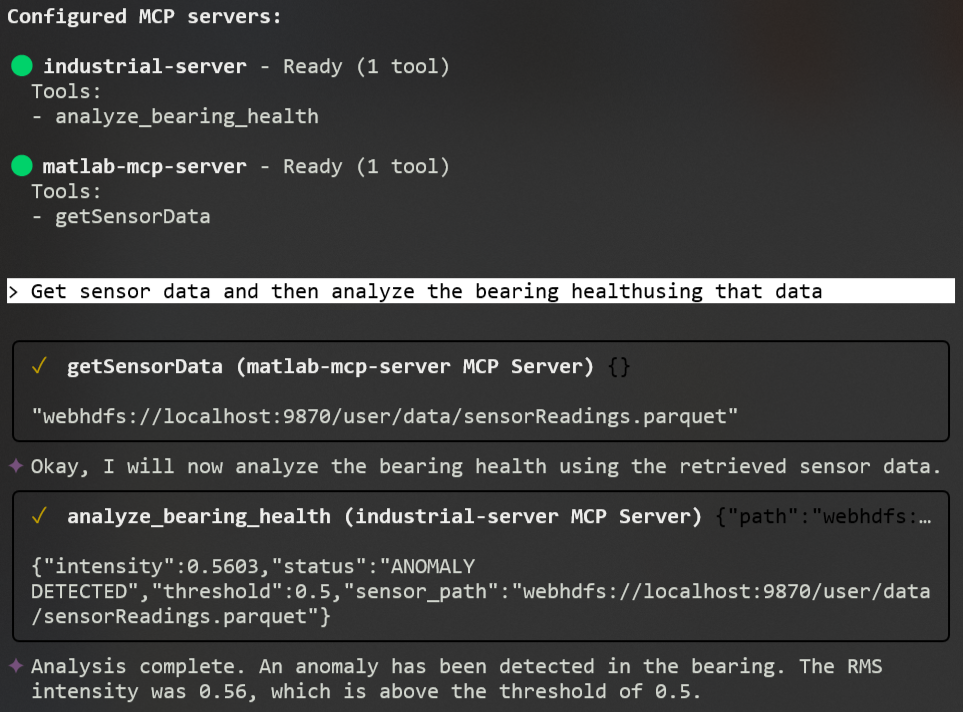

In this post, I'm turning a Gemini agent into an industrial health monitor. By nesting the agent in a sprites.dev sandbox, I've got it orchestrating a hand-off between MATLAB (for synthetic data generation) and Python (for health analysis), all while keeping things efficient by passing file paths instead of bulky data chunks. If you've ever wondered how to make LLMs work with MATLAB, Python, Hadoop, and Parquet, this workflow is for you.

The workflow and its components

- My `gemini` agent runs in the sprites.dev sandbox and connects to two MCP servers. I send a prompt to retrieve measurement data from my bearings and then analyze that data to assess their health. As a result, `gemini` sends a request to the `matlab-mcp` server.
- The `matlab-mcp-server` hosts the `getSensorData` tool. When triggered, a simple MATLAB algorithm generates data and saves it to Hadoop storage. For simplicity, I'm running both MCP servers and the Hadoop storage on the same machine. The tool returns the URL path to the stored data rather than the data itself, so the data never travels back to the agent, which saves tokens. I'm using MCP Framework for MATLAB Production Server to host my MATLAB tool on the MCP server.
- Once the `gemini` agent receives the URL path to the stored sensor data, it sends a request to my `industrial` MCP server, which hosts the `analyzeBearingHealth` tool. This tool requires a single input: the URL path to the sensor data. A Python algorithm then processes the data and assesses the bearing health. For this server, I am using FastMCP.
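The hand-off described above can be sketched in plain Python. This is only an illustration of the "pass the path, not the data" pattern, not the actual MCP servers: both functions are hypothetical stand-ins, a CSV file in a temporary directory plays the role of the Parquet file in HDFS, and the analysis uses the overall RMS of the signal as a placeholder for the real health check.

```python
import csv
import math
import tempfile
from pathlib import Path


def get_sensor_data(out_dir: Path) -> str:
    """Stand-in for the MATLAB tool: write samples to storage, return only the path."""
    fs = 2000  # sampling rate in Hz (assumed; matches the fs used by the analysis tool)
    out_path = out_dir / "vibration.csv"
    with out_path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["vibration"])
        for k in range(fs):  # one second of noise-free signal
            t = k / fs
            sample = math.sin(2 * math.pi * 50 * t) + 0.8 * math.sin(2 * math.pi * 120 * t)
            writer.writerow([sample])
    return str(out_path)


def analyze_bearing_health(path: str, threshold: float = 0.5) -> dict:
    """Stand-in for the Python tool: load the file named by `path` and summarize it."""
    with open(path, newline="") as f:
        samples = [float(row["vibration"]) for row in csv.DictReader(f)]
    # Placeholder metric: RMS of the whole signal, not the 120 Hz intensity.
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return {"samples": len(samples), "rms": rms, "exceeds_threshold": rms > threshold}


with tempfile.TemporaryDirectory() as tmp:
    data_path = get_sensor_data(Path(tmp))      # only this short string reaches the agent
    report = analyze_bearing_health(data_path)  # the agent forwards the path unchanged
```

The agent only ever sees `data_path` and the small `report` dictionary; the 2000 samples stay on the storage side.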

Sandbox

I'm using sprites.dev as a stateful sandbox environment. Sprites is a recent offering at the time of writing (January 2026). My experience has been quite positive: it is very simple to get started, and sprites come with gemini-cli pre-installed and simple, friendly APIs. On the downside, usage billing was not very transparent. I was charged $7.79 while working on this demo, but I'm not sure what the charge was for, and I couldn't find any insights in the cost explorer. Another issue was that I couldn't get gemini-cli to work properly in interactive mode when connecting to the sprite from MobaXterm; other terminals worked great, though.

MATLAB MCP Server and getSensorData tool

I had a simple MATLAB algorithm to generate synthetic data with random noise, which is then stored in a Parquet file in HDFS.

    % Construct synthetic vibration signature:
    % Component 1: 50Hz fundamental frequency
    % Component 2: 120Hz higher-frequency fault harmonic
    % Component 3: Random noise scaled by 0.5
    sig = sin(2*pi*50*t) + 0.8*sin(2*pi*120*t) + 0.5*randn(size(t));

    % --- Data Persistence ---
    % Convert signal vector to a table for Parquet compatibility
    % Variable name 'vibration' is used for schema consistency in HDFS
    T = array2table(sig', 'VariableNames', {'vibration'});

    % Write the file to the local working directory
    parquetwrite(outPath, T);

Using Hadoop is not that fashionable nowadays but it is certainly a low key way to get data shared between MCP tools. Since MATLAB's parquetwrite supports HDFS out of the box, I decided to use it.

Industrial Server and analyzeBearingHealth tool

The analyzeBearingHealth tool receives the path to the Parquet file, analyzes the data, and assesses the bearing's health state (source code).

    @mcp.tool()
    def analyze_bearing_health(path: str, threshold: float = 0.5) -> dict:
        """
        Reads vibration data and judges bearing health based on RMS intensity at 120Hz.

        Args:
            path: URI or local path to the sensor data (Parquet format).
            threshold: RMS intensity limit (default 0.5).
        """
        # Load data from HDFS or local filesystem
        df = pd.read_parquet(path, engine='pyarrow')

        # Calculate intensity at the specific bearing fault frequency (120Hz)
        intensity = get_rms_at_freq(df['vibration'].values, fs=2000, target_freq=120)

        # Assess health and return the results ...
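The `get_rms_at_freq` helper is not shown in the excerpt. One possible way to implement it, purely as an assumption about what such a helper might do, is a single-bin DFT: correlate the signal with a complex exponential at the target frequency, convert the result to a peak amplitude, and divide by √2 to get the RMS of that sinusoidal component. The sketch below uses only the standard library and is checked against a noise-free version of the synthetic signal from the MATLAB section.

```python
import cmath
import math
from typing import Sequence


def get_rms_at_freq(signal: Sequence[float], fs: float, target_freq: float) -> float:
    """RMS amplitude of the sinusoidal component of `signal` at `target_freq` (Hz).

    Assumed implementation (not the post's actual helper). Valid for
    0 < target_freq < fs / 2.
    """
    n = len(signal)
    if n == 0:
        raise ValueError("signal must not be empty")
    # Single-bin DFT: correlate the signal with exp(-j*2*pi*f*k/fs).
    step = -2j * math.pi * target_freq / fs
    coeff = sum(x * cmath.exp(step * k) for k, x in enumerate(signal))
    peak = 2.0 * abs(coeff) / n   # single-sided peak amplitude
    return peak / math.sqrt(2.0)  # RMS of a sinusoid is peak / sqrt(2)


# Noise-free version of the synthetic signal: 1 s sampled at 2000 Hz.
fs = 2000
t = [k / fs for k in range(fs)]
sig = [math.sin(2 * math.pi * 50 * x) + 0.8 * math.sin(2 * math.pi * 120 * x) for x in t]

rms_120 = get_rms_at_freq(sig, fs=fs, target_freq=120)  # 0.8 / sqrt(2), about 0.566
rms_50 = get_rms_at_freq(sig, fs=fs, target_freq=50)    # 1.0 / sqrt(2), about 0.707
```

With the 0.8 amplitude used for the 120 Hz component, the noise-free RMS of about 0.566 sits just above the default `threshold` of 0.5, so the synthetic signal would be flagged under a simple "intensity above threshold" rule. The random noise in the real generator shifts this value slightly from run to run.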