Automating an Airflow Pipeline with an LLM Agent

June 19, 2025

Summary

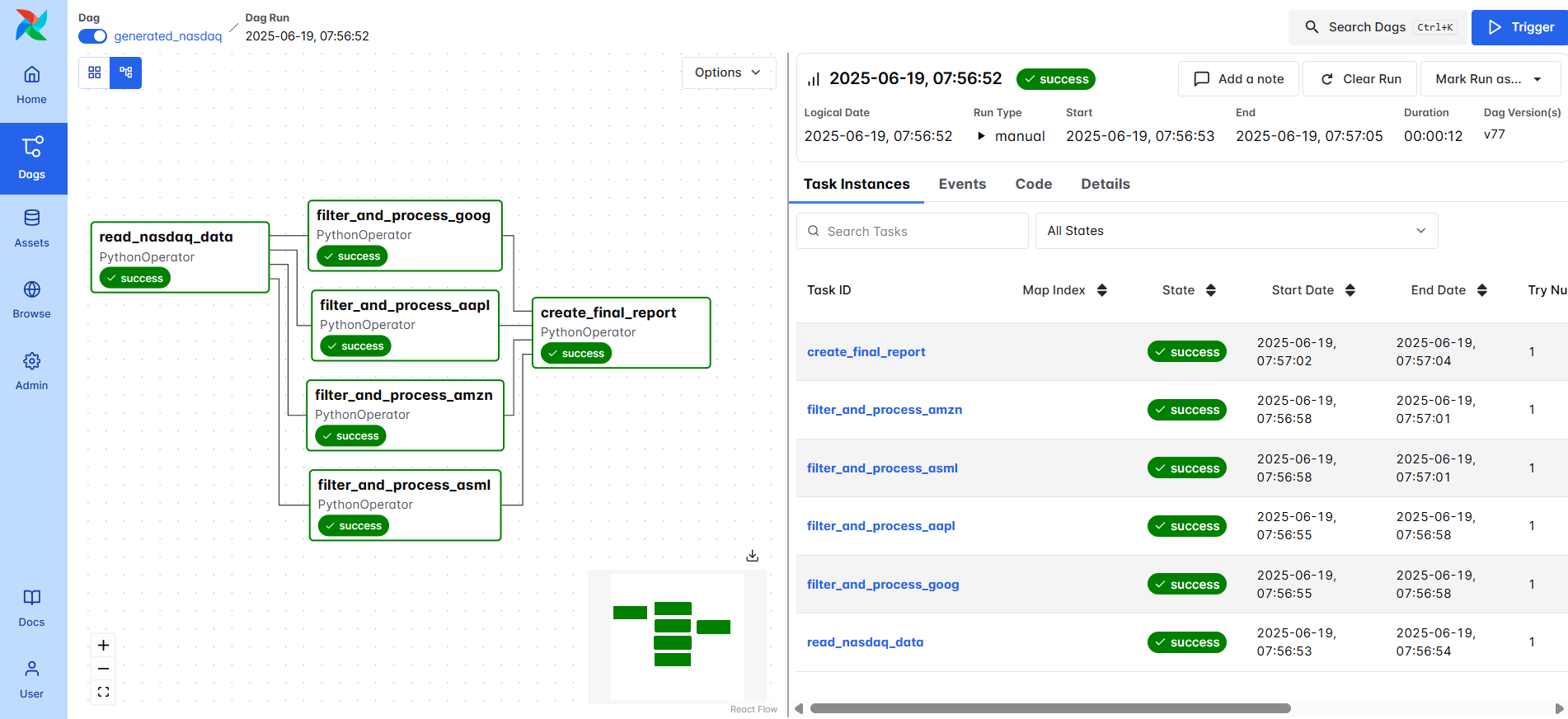

I'm sharing my experience developing an LLM agent that generates and triggers an Apache Airflow pipeline. The pipeline reads data via a REST API call, processes and filters the dataset, calculates moving averages, and finally generates a static HTML report with plots and tables.
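The four steps can be illustrated with plain functions before any orchestration is involved. This is a minimal, stdlib-only sketch under stated assumptions: the post doesn't specify the API, the data schema, or the averaging window, so `SAMPLE_RESPONSE`, the `date`/`value` fields, and all function names are hypothetical, and plots are left out of the report.

```python
import html
import json
from statistics import mean

# Hypothetical stand-in for the REST API response; the real endpoint and
# schema are not given in the post.
SAMPLE_RESPONSE = json.dumps([
    {"date": "2025-06-01", "value": 10.0},
    {"date": "2025-06-02", "value": 12.0},
    {"date": "2025-06-03", "value": None},
    {"date": "2025-06-04", "value": 14.0},
    {"date": "2025-06-05", "value": 16.0},
])


def fetch_records(raw: str) -> list[dict]:
    """Step 1: parse the API payload (a real task would issue an HTTP GET)."""
    return json.loads(raw)


def filter_records(records: list[dict]) -> list[dict]:
    """Step 2: drop rows with missing values."""
    return [r for r in records if r["value"] is not None]


def moving_average(values: list[float], window: int) -> list[float]:
    """Step 3: trailing simple moving average; the first window-1 points get none."""
    if window < 1:
        raise ValueError("window must be >= 1")
    return [mean(values[i - window + 1 : i + 1]) for i in range(window - 1, len(values))]


def render_report(records: list[dict], averages: list[float], window: int) -> str:
    """Step 4: build a static HTML table pairing each value with its average."""
    rows = [
        f"<tr><td>{html.escape(rec['date'])}</td>"
        f"<td>{rec['value']:.2f}</td><td>{avg:.2f}</td></tr>"
        for rec, avg in zip(records[window - 1 :], averages)
    ]
    return (
        "<html><body><table>"
        "<tr><th>date</th><th>value</th><th>moving avg</th></tr>"
        + "".join(rows)
        + "</table></body></html>"
    )


if __name__ == "__main__":
    records = filter_records(fetch_records(SAMPLE_RESPONSE))
    # The window is applied after filtering, so it spans rows, not calendar days.
    averages = moving_average([r["value"] for r in records], window=2)
    print(render_report(records, averages, window=2))
```

In an Airflow DAG, each of these functions would typically become its own task, so a failing step can be retried without rerunning the whole pipeline.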

The pipeline itself is simple and could probably be generated in just a few iterations in a chat web app. With this experiment, I wanted to see how well an agent could solve the task by following my prompt. Can it complete the task in one go, learn from its mistakes, recover from errors, and adapt the generated code?

The agent should be able to pick the right tools throughout its run. These tools perform specific actions within the environment, grounding the execution flow and reducing the chance that the LLM gets off track. Choosing which tool to call is the LLM's responsibility.
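The tool-selection loop can be reduced to a small dispatcher: the runtime keeps a registry of named tools, the LLM replies with the tool it wants plus its arguments, and the runtime runs only registered tools. Agent frameworks such as smolagents provide their own abstractions for this; the sketch below is library-free, and the tool names (`write_dag_file`, `trigger_dag`) and the JSON reply format are hypothetical.

```python
import json
from typing import Callable

# Registry of tools the agent may call. Names and behavior are hypothetical
# stand-ins for real actions such as writing a DAG file or triggering a run.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function so the runtime can dispatch to it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def write_dag_file(filename: str, code: str) -> str:
    # A real tool would write into Airflow's DAG folder; here we only report.
    return f"wrote {len(code)} characters to {filename}"


@tool
def trigger_dag(dag_id: str) -> str:
    # A real tool would call Airflow's CLI or REST API.
    return f"triggered {dag_id}"


def dispatch(llm_reply: str) -> str:
    """Execute the tool chosen by the LLM.

    Expects a JSON reply like {"tool": "...", "args": {...}}. Problems are
    returned as text instead of raised, so they can be fed back to the LLM.
    """
    try:
        call = json.loads(llm_reply)
        fn = TOOLS[call["tool"]]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return f"error: invalid tool call ({exc!r})"
    try:
        return fn(**call.get("args", {}))
    except TypeError as exc:
        return f"error: bad arguments ({exc})"
```

Returning errors as text rather than raising lets the agent read the message on its next step and try again.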

Why Airflow

Airflow seems to be an excellent tool for defining and orchestrating pipelines. And since the task consists of a set of well-defined steps, translating them into a pipeline seemed reasonable.
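A pipeline of well-defined steps is a directed acyclic graph: each step runs once its dependencies have finished. The sketch below uses Python's standard `graphlib` (not Airflow) to show that idea, and the step names are hypothetical. In Airflow, the same edges are declared between tasks, for example with `fetch_data >> filter_data`.

```python
from graphlib import TopologicalSorter

# Each (hypothetical) step maps to the set of steps it depends on.
PIPELINE: dict[str, set[str]] = {
    "fetch_data": set(),
    "filter_data": {"fetch_data"},
    "moving_averages": {"filter_data"},
    "html_report": {"moving_averages", "filter_data"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every step runs after all of its dependencies.

    Raises graphlib.CycleError if the steps do not form a DAG.
    """
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(execution_order(PIPELINE))
```

Because this graph is a simple chain, there is exactly one valid order; with independent branches, an orchestrator like Airflow could run those branches in parallel.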

Why LLM Agents

To kick the tires and get hands-on experience building one.

Results

After numerous attempts and prompt adjustments, the pipeline started running (somehow). It was brittle, and I eventually gave up tinkering with the prompt. The agent completed the task and generated the report in only 3 out of 10 runs.

Experiment Details

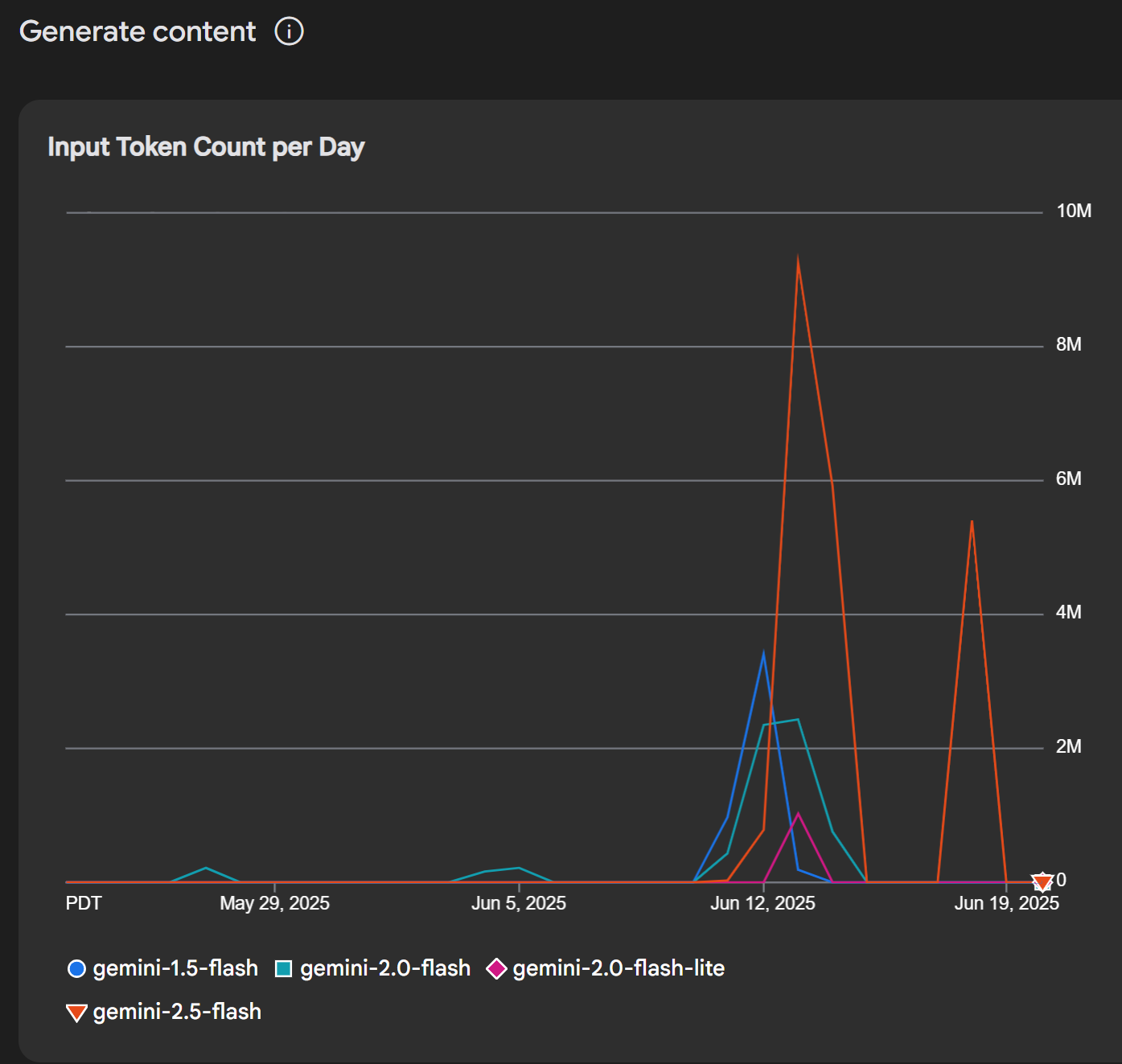

I conducted the experiment in June 2025, using smolagents as the agent framework and gemini-2.5-flash as the LLM. The whole experiment cost €2.88 (source code).

What I Learned

The agent's results were far from deterministic, and it was quite fragile: even tiny changes in the prompt had huge effects on the output. It was hard to tell what caused those differences. Was it the prompt modifications, or just the agent's inherent non-determinism? Would I be better off running several agents in parallel, each with a different configuration and prompt, and having an LLM judge pick the winner?
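One way to separate prompt effects from run-to-run noise is to run each prompt variant several times and compare success rates rather than single outcomes. This is a minimal sketch: `fake_agent` is a simulated agent that succeeds 30% of the time regardless of the prompt, not the experiment's code, and all names are hypothetical.

```python
import random
from typing import Callable

# One full agent run: takes the prompt and an RNG, returns True if the
# report was generated. A real harness would invoke the agent and check output.
AgentRun = Callable[[str, random.Random], bool]


def success_rate(run: AgentRun, prompt: str, trials: int, seed: int = 0) -> float:
    """Run the same prompt `trials` times and return the fraction of successes."""
    if trials < 1:
        raise ValueError("trials must be >= 1")
    rng = random.Random(seed)
    successes = sum(run(prompt, rng) for _ in range(trials))
    return successes / trials


def compare_prompts(run: AgentRun, prompts: dict[str, str], trials: int) -> dict[str, float]:
    """Success rate per prompt variant, each with its own RNG seed."""
    return {
        name: success_rate(run, text, trials, seed=i)
        for i, (name, text) in enumerate(prompts.items())
    }


def fake_agent(prompt: str, rng: random.Random) -> bool:
    # Simulated flaky agent: the prompt has no effect, so any difference
    # between variants is pure noise.
    return rng.random() < 0.3


if __name__ == "__main__":
    same = "Generate and trigger the report DAG."
    print(compare_prompts(fake_agent, {"v1": same, "v2": same}, trials=10))
```

Comparing two names for the same prompt at 10 trials each may already show different rates; that gap is noise, and it shrinks as the number of trials per variant grows.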

On the positive side, the agent picked the right tool. I didn't have to prescribe which tool to use when, and it consistently chose the right one.

The LLM’s knowledge cutoff was annoying, since I was using Airflow 3.0.2, a release that was hot off the press. The LLM’s suggestions were based on older versions, so it kept producing pipeline properties that had been removed in the latest version. I had to adjust my prompt to work around this.
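Besides adjusting the prompt, a guardrail could catch such code before it is deployed. The post doesn't list which properties the LLM kept producing, so the names below are examples of Airflow 2 APIs removed in Airflow 3 (for instance `schedule_interval`, replaced by `schedule`). The `check_dag_code` function is hypothetical; it could, for example, be exposed to the agent as a tool so the feedback goes straight back to the LLM.

```python
import re

# Illustrative (not exhaustive) Airflow 2 names removed in Airflow 3,
# each mapped to a hint the agent can act on.
REMOVED_IN_AIRFLOW_3 = {
    "schedule_interval": "use the `schedule` argument instead",
    "days_ago": "pass an explicit datetime as start_date",
    "execution_date": "use `logical_date` instead",
    "SubDagOperator": "SubDAGs were removed; use TaskGroups",
}


def check_dag_code(code: str) -> list[str]:
    """Return one feedback message per removed name found in generated DAG code.

    This is a plain word-boundary search, so it also flags matches inside
    comments or strings; that is acceptable for a quick guardrail.
    """
    problems = []
    for name, hint in REMOVED_IN_AIRFLOW_3.items():
        if re.search(rf"\b{re.escape(name)}\b", code):
            problems.append(f"`{name}` is not available in Airflow 3: {hint}")
    return problems
```

An empty list means none of the listed names were found, not that the DAG is valid; actually parsing it in an Airflow 3 environment remains the real test.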

As of June 2025, developing agents still requires a human in the loop. Even for this simple application, identifying root causes and devising remedies required my attention.